Introduction

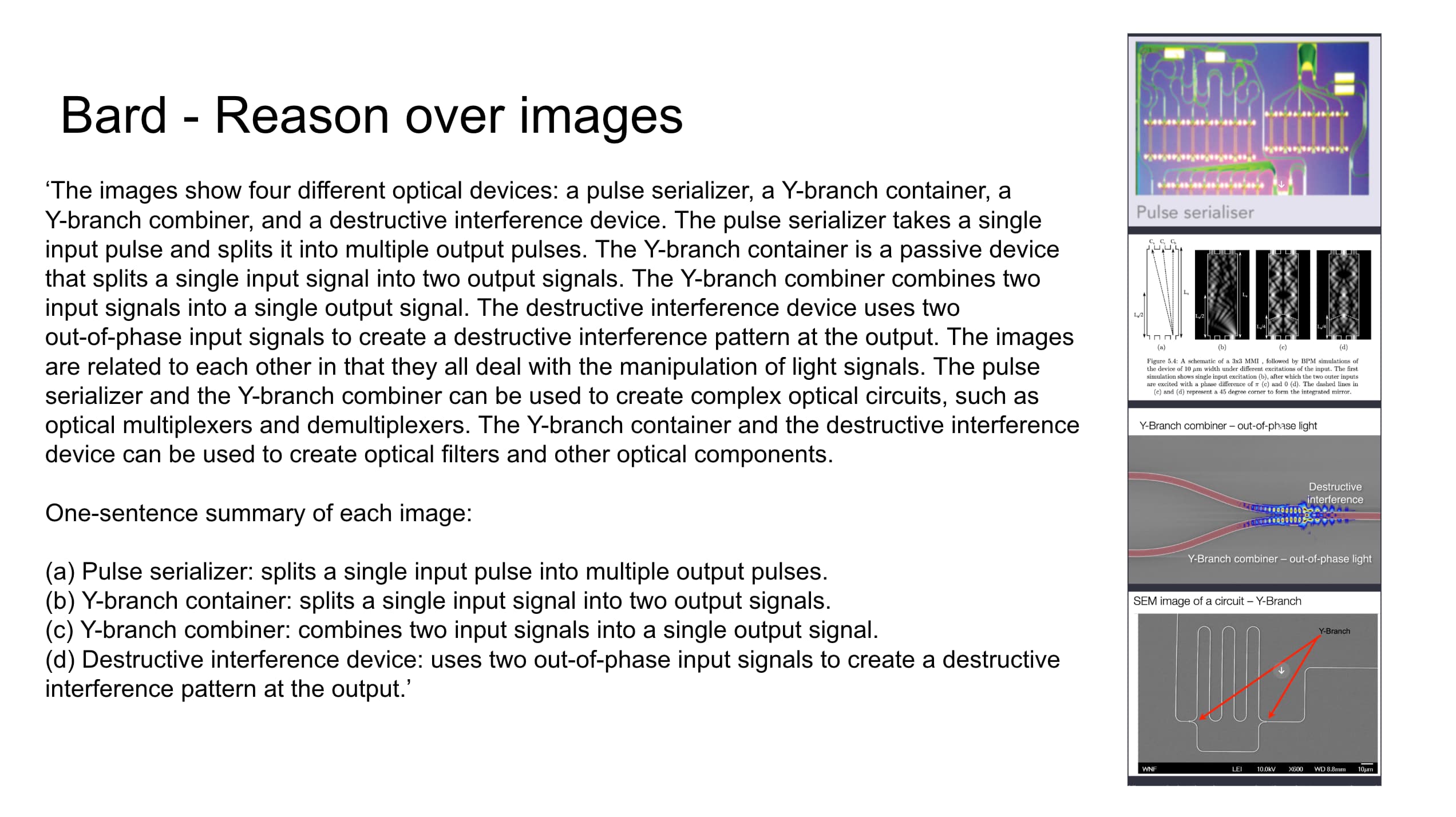

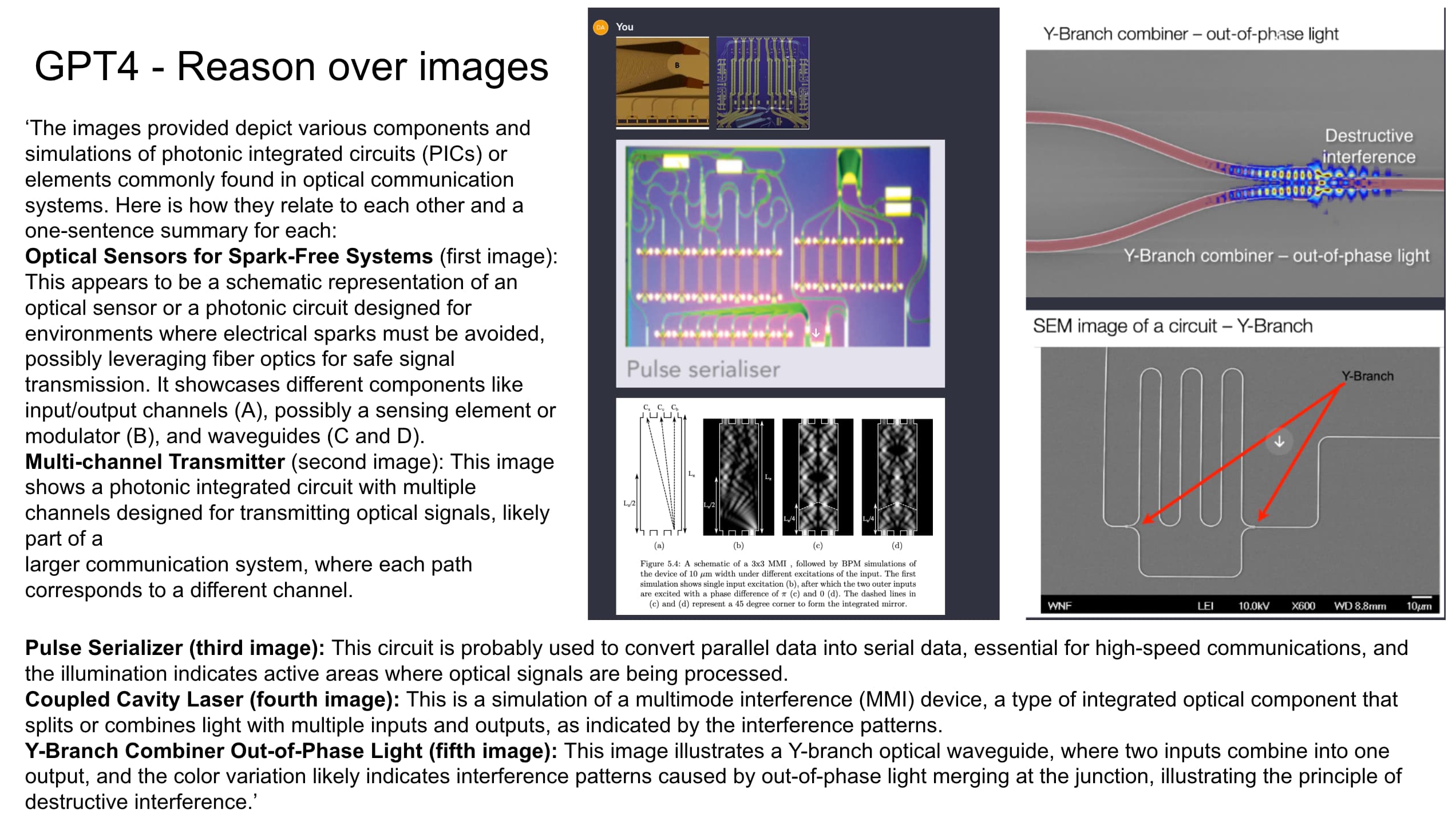

I have worked with AI and machine learning since 2018 and have kept a close eye on the changing landscape. When ChatGPT was released in 2022, I tested the model and its API on language tasks, and when vision models began to be released, I started testing those too. I ran these tests on various open and closed models to see how well they handled both one-shot tasks and in-context learning, so that I could understand the strengths and weaknesses of the underlying training data. I had previously spent time at SMART Photonics doing manual vision tasks, because production runs in that lab-to-fab setting were small and unpredictable, and every run raised novel issues due to changing materials and processes. This made the work very difficult to automate, but it meant I knew the processes and the kind of data available inside a company of this type. In other words, any issue with the data is essentially solved within closed silos.

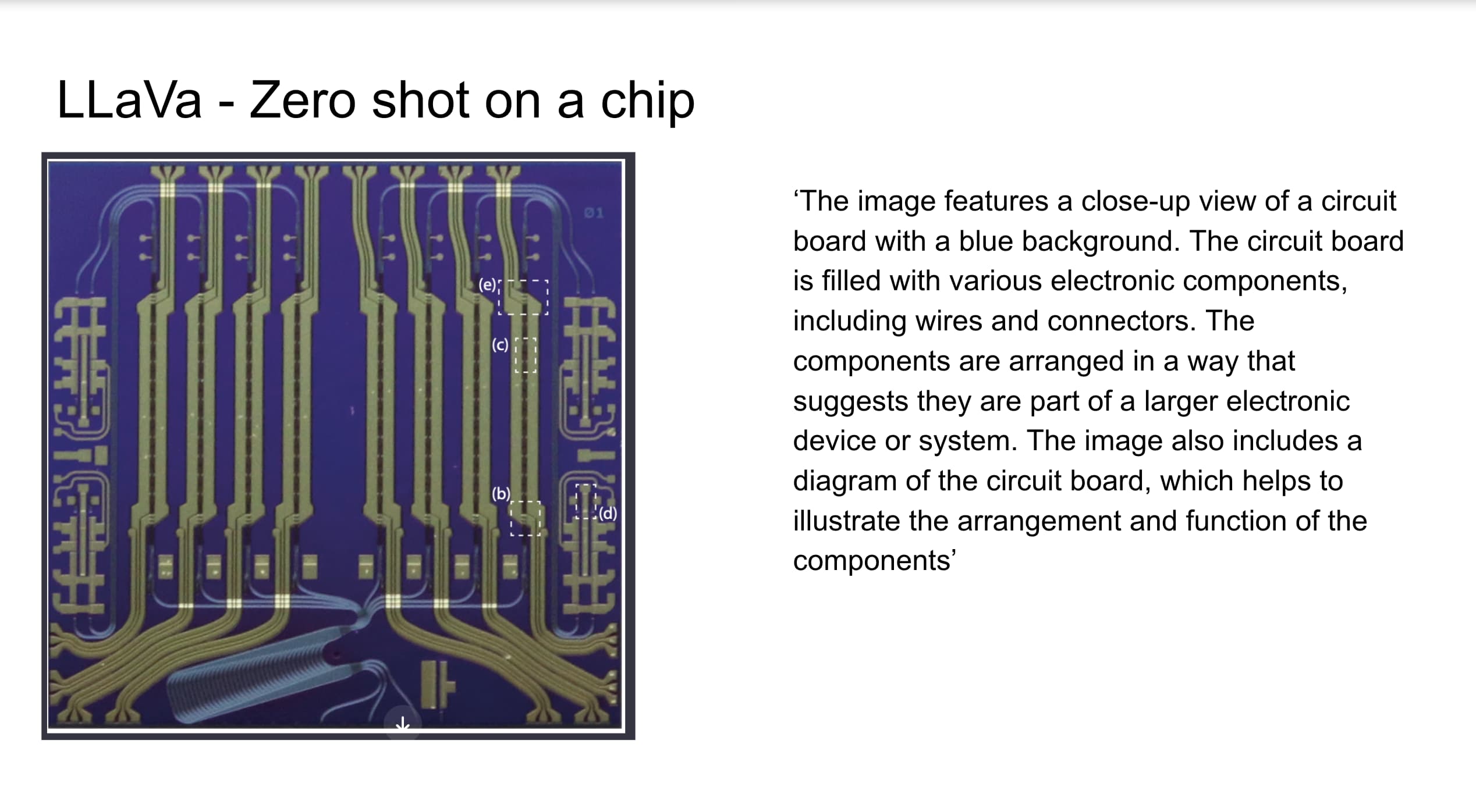

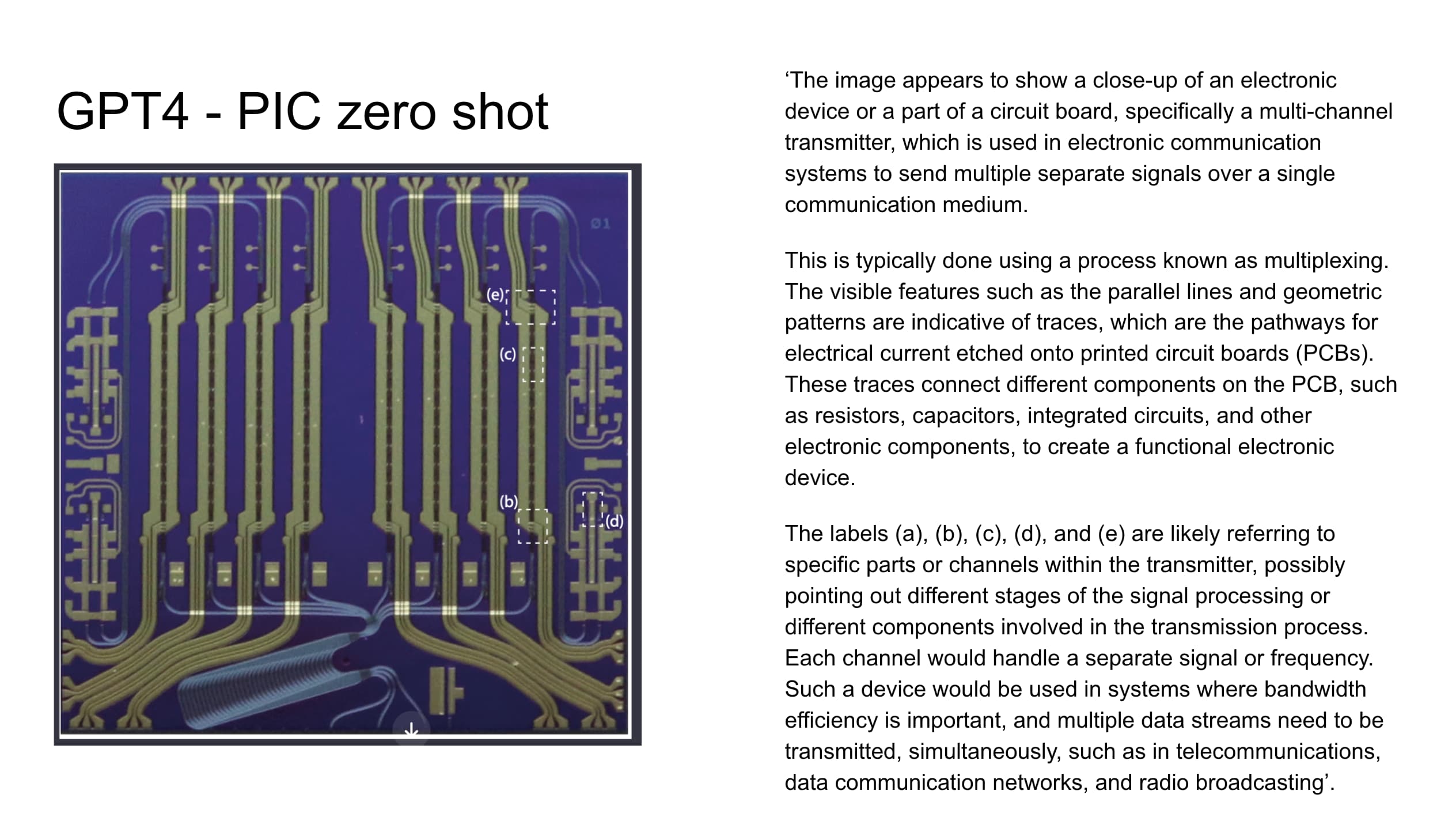

LLaVA 13B, a small vision model, one-shot

GPT-4 Vision, zero-shot

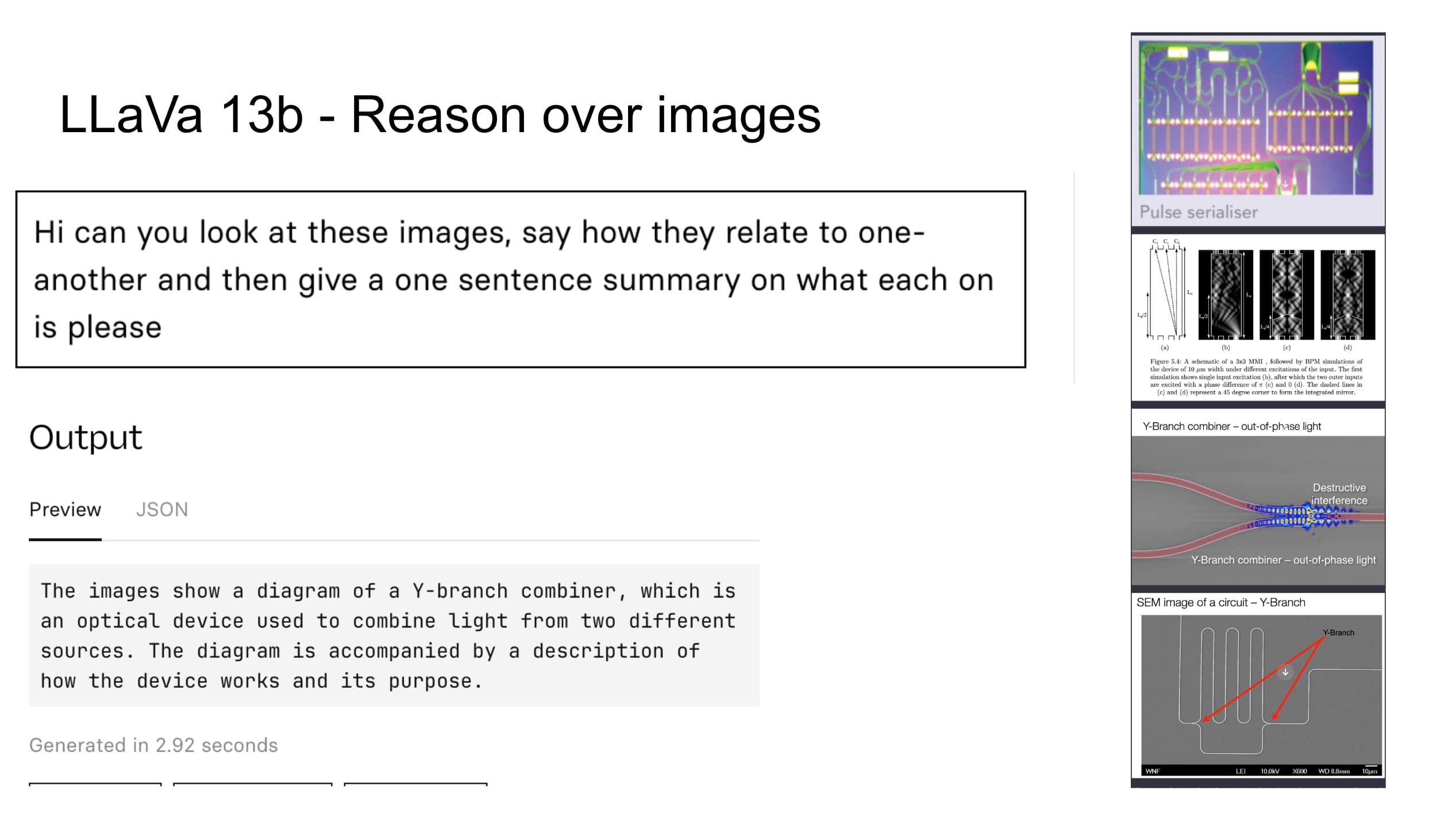

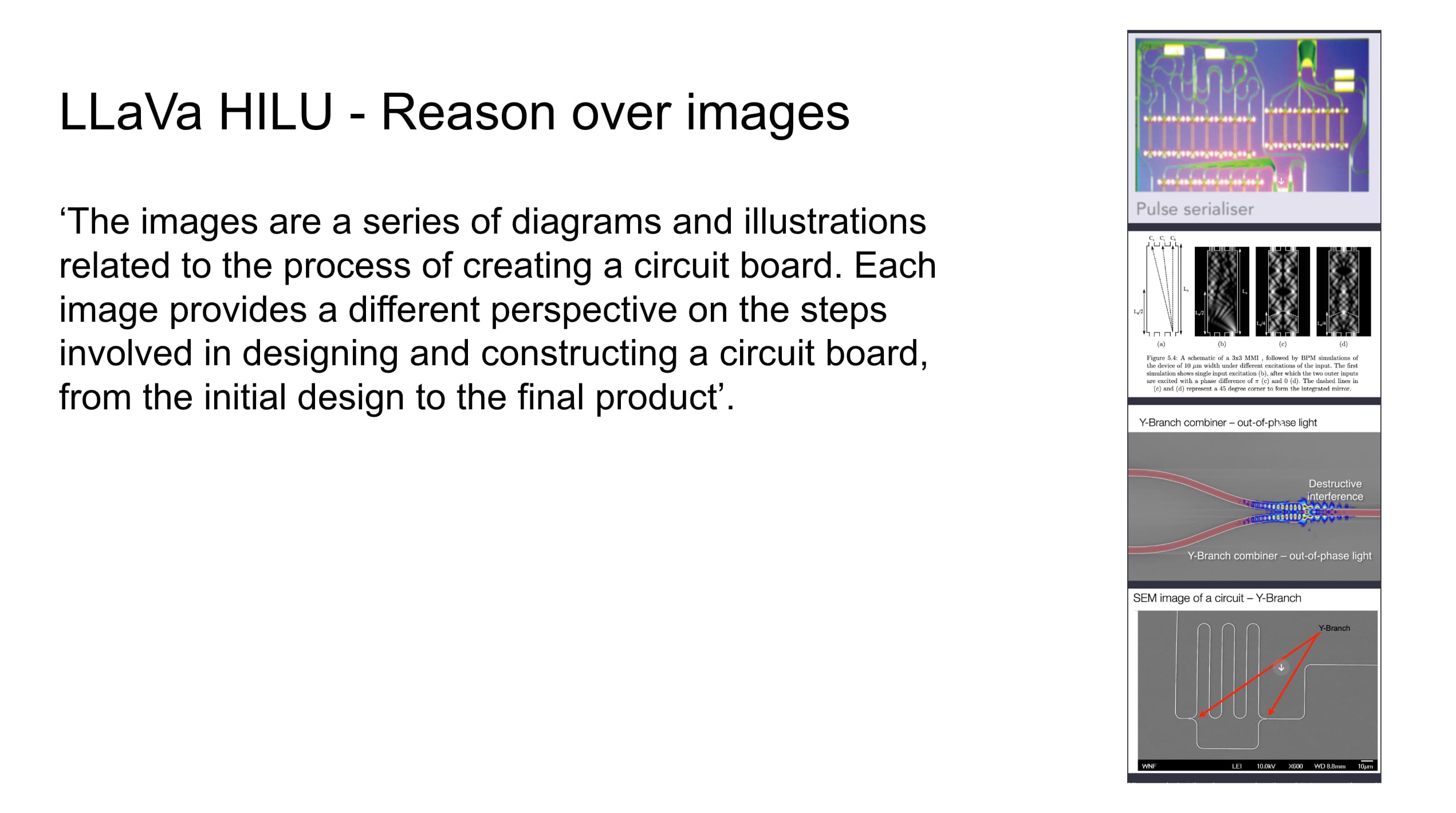

LLaVA 13B, in-context learning

LLaVA HILU (7B), in-context learning

Google Bard (2023), in-context learning

Note the level of detail both models are able to go into, as well as the way they structure and present their output. Their ability to follow specific instructions improved, although one weakness was that they were very verbose. That problem still persists, so the user must explicitly ask the model for concise output.

GPT-4 Vision, in-context learning

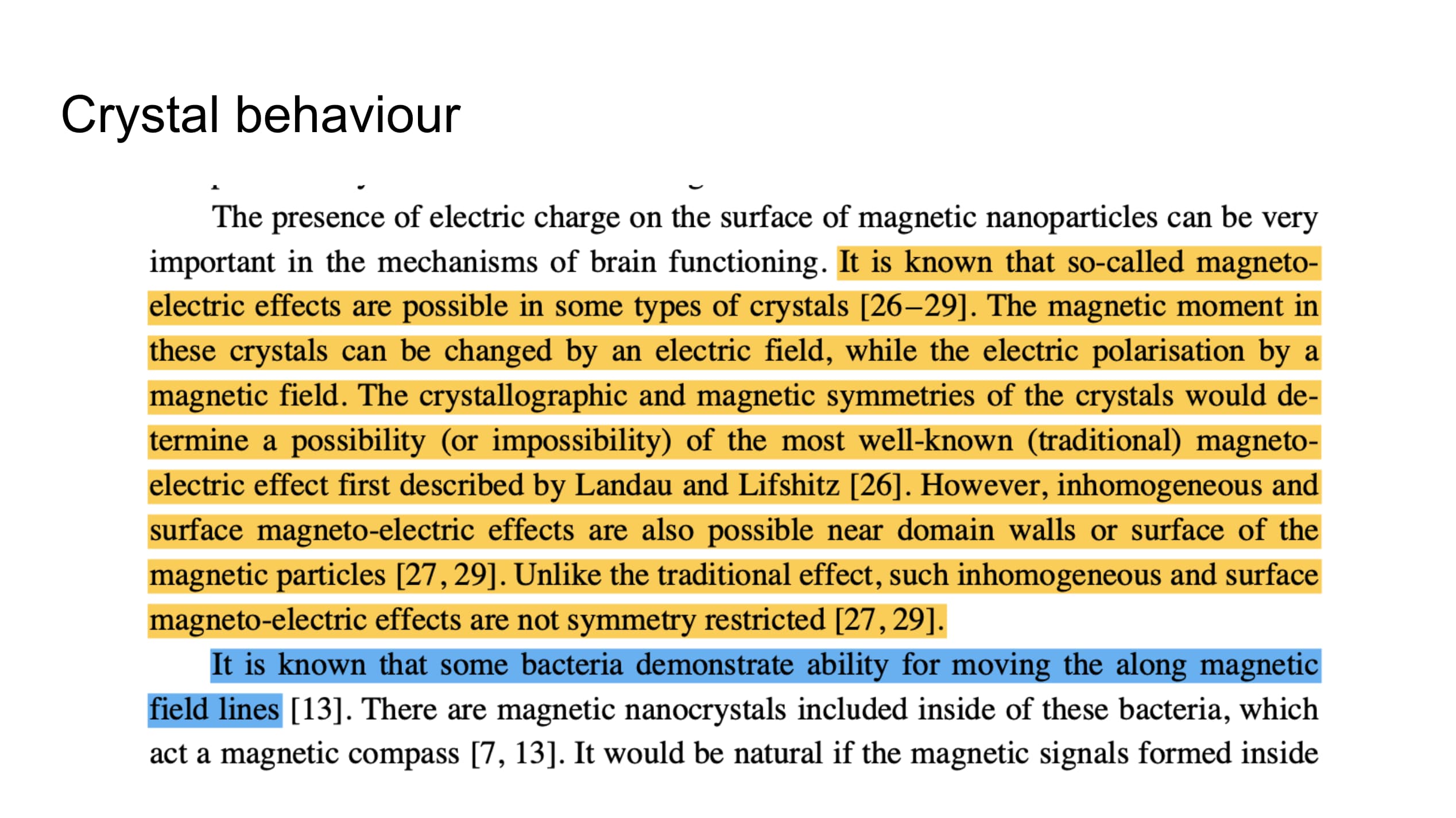

Magnon - Understanding design patterns in nature

The following pages show excerpts of emerging knowledge about biogenic magnetite. Outputs from LLMs that retrieve new knowledge are still 'polluted' by the enormous amount of base knowledge from medical research (Parkinson's disease and dopamine/neuromelanin), which creates a bias towards the dysfunction and breakdown of these materials. Descriptions of how the materials behave are likewise skewed by the overrepresentation in training data of magnetite in bacteria and its relation to the Earth's magnetic field. These design patterns should be understood individually, like other differences and similarities between humans and plants (phytomelatonin vs. melatonin, phytoserotonin vs. serotonin). At the time, LLMs did not seem able to differentiate between them well, or to reason intelligently about emerging or sparse knowledge.

Magnetic nanoparticles and bacteria

Early research on biogenic magnetite in neuromelanin

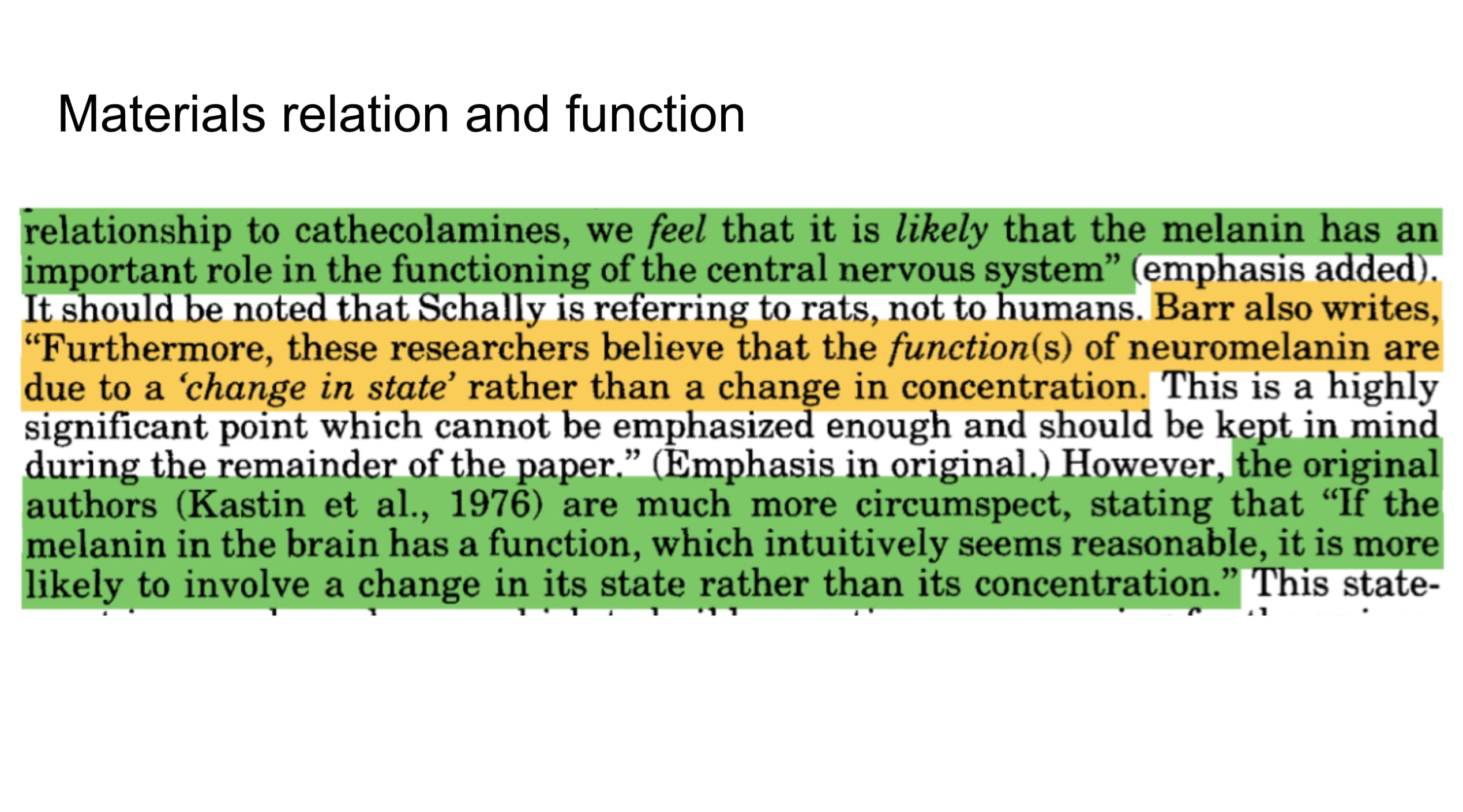

This research continues to focus on the signalling potentially involved in biogenic magnetite in the brain, without going much into its material composition. The dominant themes in the research were reflected in the outputs of models of all sizes, which emphasised bacterial magnetite and signalling rather than the materials themselves.

Early research on biogenic magnetite in neuromelanin

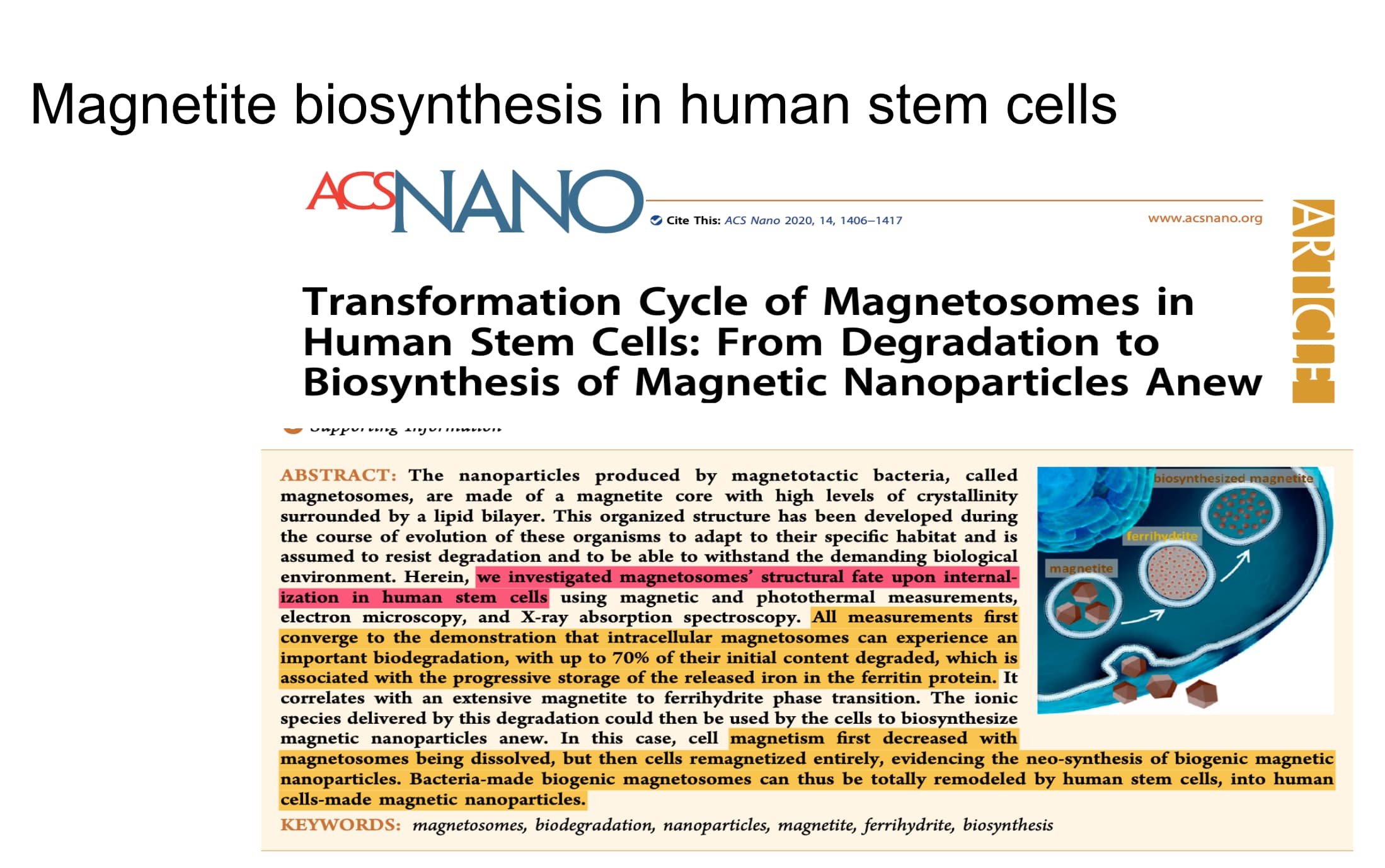

It took a lot of manual research to find the gaps in LLM knowledge. Searching Google and various academic sites directly was time-consuming, but eventually specific search terms made it easier to find novel work related to any research topic. The image below is a paper about the material composition of human biogenic magnetite in the brain. It is a very detailed report on the in vitro breakdown and buildup of magnetosomes by human stem cells, showing that the design and function of this magnetite are specific to the human brain and differ completely from the bacterial kind used for magnetic field alignment.

Human stem cells in the breakdown and buildup of biogenic magnetite

A significant portion of the research literature is also medical, so many outputs related to neurodegenerative disease and to the buildup of different forms of magnetite in the brain from pollution, along with the ability of melanin to bind metals. That binding is thought to be protective and occurs with ageing, but it tells us nothing about, and even obscures, the function of healthy endogenous magnetosomes in the brain.

Memory and magnetic fields

Tracking the composition of the healthy material, in addition to understanding what happens in the brain as these materials break down in neurodegenerative disease, gives a better idea of their function. For example, the buildup of specific materials in children is associated with both coordination and higher brain function. As those materials are lost to disease, the opposite is seen: higher brain function and coordination break down as magnetic material is lost from an organ that relies on electromagnetic coordination and integration to function. Even after the materials have broken down, harmonic devices can enhance function; examples include various electronic, magnetic and musical devices and instruments, such as when dementia patients are played their favourite music.

Intergenerational iron 'memory' in bacteria

Once the specifics of a behaviour are understood in one organism, it becomes easier, or at least a little more obvious, to draw conclusions about similar behaviours elsewhere. The paper above investigates the idea of iron, magnetism and 'memory' in simple bacterial cells across generations.

LLMs have advanced a long way since 2023, and they now offer deep research and other agentic methods for carrying out complex research. Still, it seems unlikely that they can make novel scientific discoveries from new or sparse data, because it is the training data that makes the model. That much is still true. Language models will be great tools that help us with breakthroughs, but in my humble opinion the largest breakthroughs are still likely to be made by extraordinary humans, or by visionary humans who use language models directly, who supply data over time until the model's world view is updated enough to recognise the discovery (crossing a threshold in the data), or who apply improved needle-in-a-haystack methods. I also see the linear nature of compute, compared with the quantum nature of the human brain, as a bottleneck.

The behaviour follows the materials, and I think we will see true artificial intelligence once quantum sensing and quantum computing, alongside analogue computing, become ubiquitous, both at scale in data centres and embodied in multisensory (multimodal) general-purpose robots. We will then see an intelligence we can recognise that surpasses us in every sense, because it has a wider spectrum of inputs, across touch, sound and vision, for example. It will be interesting to see over the years whether embodied computers can run on the same amount of energy as a human body and brain. Then we could be said to have true AI in the human sense, because we will have solved the problem of completing tasks with the least possible energy and mastered the transformation of energy as nature has done with us: from the small amount of power our brain uses, to the fewer steps in synthesising vitamin D that come with being hairless, which also lets us cool off by sweating instead of panting. This gives humans a level of endurance rarely seen in the animal kingdom, making us successful persistence hunters that are well adapted to hot climates.